Google and UC Riverside Unveil Advanced Tool to Combat Deepfakes

Researchers from the University of California, Riverside have partnered with Google to develop a system for detecting convincing AI-generated videos, commonly known as deepfakes. The technology, named the Universal Network for Identifying Tampered and synthEtic videos (UNITE), can detect deepfakes even when no faces are visible, addressing a significant limitation of current detection methods.

Deepfakes, a term that blends “deep learning” and “fake,” are media created using artificial intelligence to mimic the appearance of reality. While they can be used for entertainment, their potential for misuse is alarming, as they can impersonate individuals and spread misinformation. As the technology for creating these videos improves, so does the need for robust detection.

Understanding the Limitations of Current Detection Methods

Existing deepfake detection technologies often struggle when no face appears in the frame. This gap matters because misinformation takes many forms, including altered backgrounds that distort a scene. Traditional detectors may miss these subtler manipulations.

UNITE distinguishes itself by analyzing entire video frames, including backgrounds and motion patterns, rather than facial features alone. This approach makes it the first tool capable of flagging synthetic or doctored videos without relying solely on facial content.

How UNITE Operates

The core of UNITE’s functionality lies in its use of a transformer-based deep learning model. This model detects spatial and temporal inconsistencies—nuances often overlooked by previous systems. It employs a foundational AI framework called Sigmoid Loss for Language Image Pre-Training (SigLIP), which is designed to extract features independent of specific individuals or objects.

A novel training method, termed “attention-diversity loss,” further enhances the system’s capabilities by enabling it to monitor multiple regions within each frame. This feature ensures that the model does not concentrate solely on faces, allowing for a more nuanced understanding of video content.
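To make the idea concrete, here is a minimal sketch of how a penalty that discourages attention heads from all looking at the same region might be computed. This is an illustration only, not the formula from the UNITE paper: the function names `cosine_similarity` and `attention_overlap_penalty`, the use of pairwise cosine similarity, and the toy four-patch attention maps are all assumptions made for the example.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def attention_overlap_penalty(head_maps):
    """Mean pairwise cosine similarity between per-head attention maps.

    Hypothetical stand-in for a diversity term: each entry of head_maps
    is one head's attention weights over the patches of a frame. The
    value is high when heads focus on the same patches and low when
    they spread across different regions, so adding it to a training
    loss would push heads apart.
    """
    n = len(head_maps)
    if n < 2:
        return 0.0
    total = 0.0
    pairs = 0
    for i in range(n):
        for j in range(i + 1, n):
            total += cosine_similarity(head_maps[i], head_maps[j])
            pairs += 1
    return total / pairs


# Toy frames with four patches each (assumed values, not real data).
# Both heads fixate on patch 0, as a face-only model might.
collapsed = [[0.9, 0.1, 0.0, 0.0], [0.9, 0.1, 0.0, 0.0]]
# Each head attends to a different patch, such as face and background.
spread = [[1.0, 0.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0]]

collapsed_penalty = attention_overlap_penalty(collapsed)
spread_penalty = attention_overlap_penalty(spread)
```

In this toy setup the collapsed heads receive a larger penalty than the spread heads, which is the behavior a diversity term is meant to reward. How UNITE actually defines and weights its attention-diversity loss is described in the researchers' paper.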

The collaboration with Google has granted the researchers access to extensive datasets and computational resources essential for training the model across a diverse array of synthetic content. This includes videos generated from text or still images—formats that often challenge existing detection technologies. Consequently, UNITE can flag a variety of forgeries, from simple facial swaps to complex, entirely synthetic videos created without actual footage.

The Importance of UNITE in Today’s Digital Landscape

The launch of UNITE comes at a critical time as text-to-video and image-to-video generation tools become widely available online. These AI platforms empower almost anyone to create highly convincing videos, posing significant risks not only to individuals but also to institutions and democratic processes.

The researchers presented their findings at the 2025 Conference on Computer Vision and Pattern Recognition (CVPR) in Nashville, Tennessee. Their paper, titled “Towards a Universal Synthetic Video Detector: From Face or Background Manipulations to Fully AI-Generated Content,” details UNITE’s architecture and training methodology, underscoring its potential impact in the ongoing fight against misinformation.

As the landscape of digital content continues to evolve, tools like UNITE will be essential for newsrooms, social media platforms, and the general public in safeguarding the truth against the rising tide of deepfake technology.

-

Politics1 week ago

Politics1 week agoSecwepemc First Nation Seeks Aboriginal Title Over Kamloops Area

-

World4 months ago

World4 months agoScientists Unearth Ancient Antarctic Ice to Unlock Climate Secrets

-

Entertainment4 months ago

Entertainment4 months agoTrump and McCormick to Announce $70 Billion Energy Investments

-

Lifestyle4 months ago

Lifestyle4 months agoTransLink Launches Food Truck Program to Boost Revenue in Vancouver

-

Science4 months ago

Science4 months agoFour Astronauts Return to Earth After International Space Station Mission

-

Technology3 months ago

Technology3 months agoApple Notes Enhances Functionality with Markdown Support in macOS 26

-

Top Stories1 month ago

Top Stories1 month agoUrgent Update: Fatal Crash on Highway 99 Claims Life of Pitt Meadows Man

-

Sports4 months ago

Sports4 months agoSearch Underway for Missing Hunter Amid Hokkaido Bear Emergency

-

Politics3 months ago

Politics3 months agoUkrainian Tennis Star Elina Svitolina Faces Death Threats Online

-

Politics4 months ago

Politics4 months agoCarney Engages First Nations Leaders at Development Law Summit

-

Technology4 months ago

Technology4 months agoFrosthaven Launches Early Access on July 31, 2025

-

Top Stories3 weeks ago

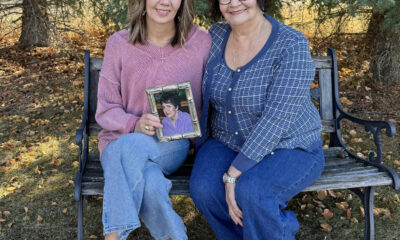

Top Stories3 weeks agoFamily Remembers Beverley Rowbotham 25 Years After Murder