OSNABRÜCK, GERMANY – Researchers have unveiled a class of artificial neural networks that replicates the organization of the human visual system more closely than existing models.

Immediate Impact

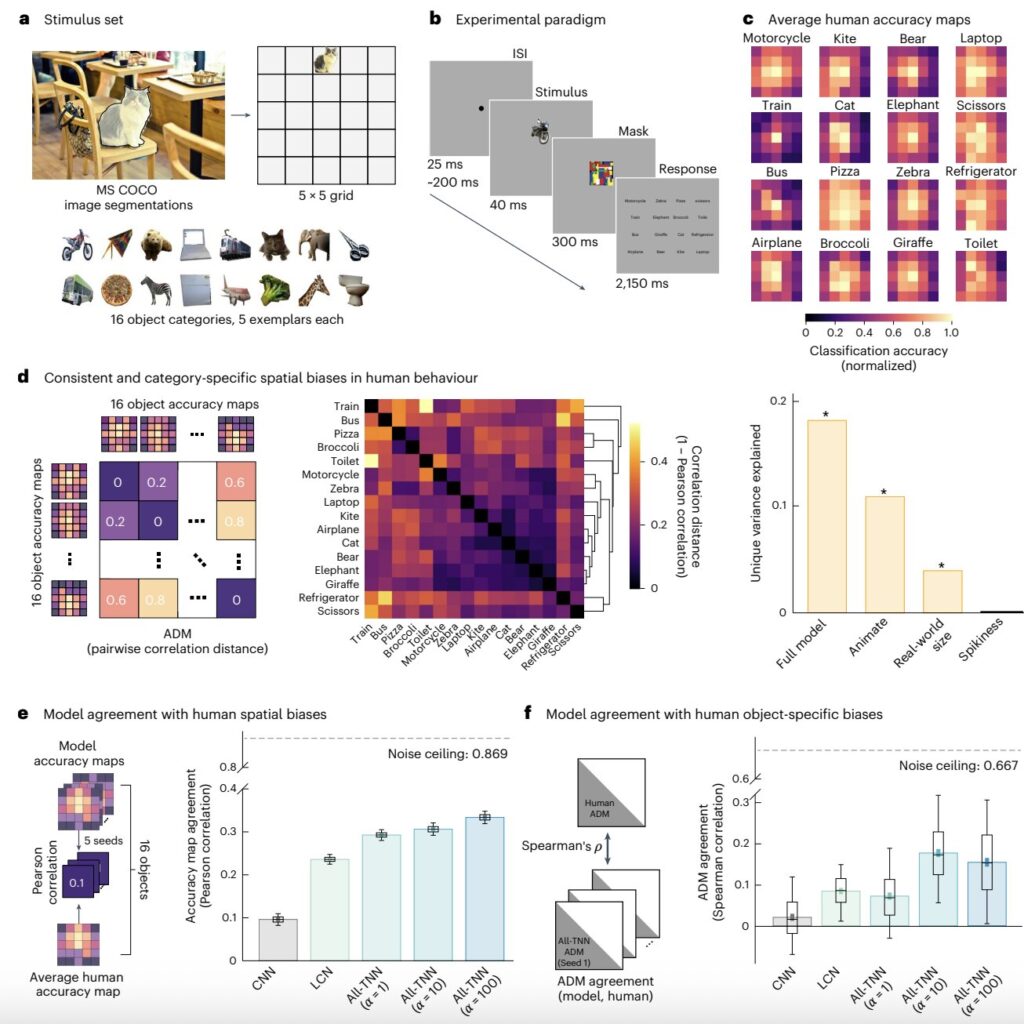

In a significant stride for neuroscience and artificial intelligence, researchers at Osnabrück University, Freie Universität Berlin, and other institutions have introduced a new class of artificial neural networks (ANNs) that mimic the human visual system more closely than existing models do. These all-topographic neural networks (All-TNNs) are described in a recent paper published in Nature Human Behaviour.

Key Details Emerge

All-TNNs are designed to address the limitations of traditional deep learning models such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs), which, although powerful, do not fully align with the biological processes of human vision. Dr. Tim Kietzmann, senior author of the study, explained that CNNs excel at transferring learned features across spatial locations, because the same filter is applied at every position of the image, but that this is not how the human brain functions.

“The brain cannot ‘copy’ and ‘paste’ information from one location of the cortex to another,” Dr. Kietzmann noted.
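The "copy and paste" contrast can be made concrete with a small sketch. The code below is illustrative only and is not taken from the paper: the function names `shared_conv1d` and `local_conv1d` are hypothetical, and a one-dimensional signal stands in for an image. It compares a convolution-style layer, where one kernel is reused at every position, with a locally connected layer, where each position owns its own kernel.

```python
def shared_conv1d(signal, kernel):
    """Convolution-style layer: ONE kernel is reused at every position."""
    k = len(kernel)
    return [sum(kernel[j] * signal[i + j] for j in range(k))
            for i in range(len(signal) - k + 1)]


def local_conv1d(signal, kernels):
    """Locally connected layer: each position has its OWN kernel."""
    k = len(kernels[0])
    return [sum(kernels[i][j] * signal[i + j] for j in range(k))
            for i in range(len(signal) - k + 1)]


signal = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
kernel = [1.0, 0.0, -1.0]
n_positions = len(signal) - len(kernel) + 1

# Weight sharing: what is learned once is available everywhere.
shared_out = shared_conv1d(signal, kernel)

# No weight sharing: start every position with a copy of the same kernel ...
local_kernels = [list(kernel) for _ in range(n_positions)]
local_out = local_conv1d(signal, local_kernels)

# ... then change a single position. Only that position's output changes,
# because nothing is copied to the other locations.
local_kernels[2] = [0.5, 0.5, 0.5]
changed_out = local_conv1d(signal, local_kernels)

# The price of untied weights is a parameter count that grows with the
# number of positions.
shared_params = len(kernel)
local_params = sum(len(k) for k in local_kernels)

print(shared_out, changed_out, shared_params, local_params)
```

In this toy setting the shared layer needs 3 weights while the locally connected layer needs 12, one kernel per position. That scaling is consistent with the remark later in the article that topographic networks are parameter rich.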

Industry Response

The introduction of All-TNNs has sparked interest among neuroscientists and AI researchers, as these models not only replicate the organization of the visual cortex but also capture human behavioral patterns more accurately. This development builds on previous work in AI vision models but offers a more biologically realistic representation.

By the Numbers

- Published in Nature Human Behaviour (2025)

- DOI: 10.1038/s41562-025-02220-7

Expert Analysis

Dr. Kietzmann emphasized the importance of spatial organization in visual processing, a key aspect of the brain’s retinotopic structure. In a CNN, the same set of feature detectors is repeated at every image location, so feature selectivity has no spatial layout of its own. In All-TNNs, by contrast, feature selectivity is spatially organized across a “cortical sheet,” allowing for a more accurate simulation of human vision.
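One way to quantify how smoothly selectivity varies across such a sheet is to compare each unit with its neighbors. The sketch below is an assumption made for illustration, not the measure or training objective reported in the study: the names `cosine_distance`, `smoothness_penalty`, and `oriented` are hypothetical, and each unit is reduced to a two-dimensional weight vector that encodes a preferred orientation.

```python
import math


def cosine_distance(u, v):
    """1 - cosine similarity; 0 means the two weight vectors point the same way."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)


def smoothness_penalty(sheet):
    """Mean cosine distance between each unit and its right and lower neighbors."""
    rows, cols = len(sheet), len(sheet[0])
    total, pairs = 0.0, 0
    for r in range(rows):
        for c in range(cols):
            if c + 1 < cols:
                total += cosine_distance(sheet[r][c], sheet[r][c + 1])
                pairs += 1
            if r + 1 < rows:
                total += cosine_distance(sheet[r][c], sheet[r + 1][c])
                pairs += 1
    return total / pairs


def oriented(theta):
    """Toy weight vector for a unit that prefers orientation theta (radians)."""
    return [math.cos(theta), math.sin(theta)]


# Smooth sheet: preferred orientation drifts gradually from unit to unit.
smooth_sheet = [[oriented(0.1 * (r + c)) for c in range(4)] for r in range(4)]

# Scrambled sheet: neighboring units prefer very different orientations.
scrambled_sheet = [[oriented(1.5 * ((7 * r + 3 * c) % 5)) for c in range(4)]
                   for r in range(4)]

smooth_score = smoothness_penalty(smooth_sheet)
scrambled_score = smoothness_penalty(scrambled_sheet)
print(smooth_score, scrambled_score)
```

A low score means neighboring units respond to similar features, which is the kind of spatial organization the article describes. A term like this could in principle be added to a training loss to encourage smooth maps, although whether and how the authors do so is not stated here.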

Background Context

Historically, computational models of the human visual system have relied heavily on deep neural networks. However, as these models evolved to become more powerful, they drifted away from accurately modeling biological processes. All-TNNs mark a shift from these traditional methods, aiming to bridge the gap between AI and human biology.

What Comes Next

All-TNNs could be applied widely in future neuroscience and psychology studies. These models could provide deeper insights into how feature selectivity across the cortex influences perception and behavior. Dr. Kietzmann and his team are currently working on making the training of these models more efficient.

“We are currently trying to improve training to be more efficient in terms of task performance, as topographic networks are parameter rich,” Dr. Kietzmann added.

The research, led by Zejin Lu and colleagues, continues to explore the biological mechanisms that contribute to smooth cortical selectivity, which could further enhance the biological realism of these models.

As this innovative approach to modeling the human visual system progresses, it promises to open new avenues for understanding the neural underpinnings of perception and behavior, contributing significantly to both AI development and cognitive science.