Science

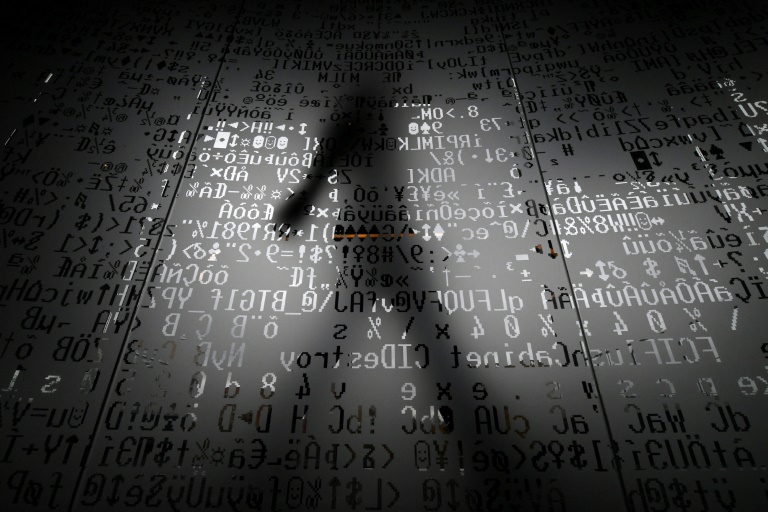

AI Approaches Free Will: New Study Raises Ethical Concerns

Recent research suggests that generative artificial intelligence (AI) may be on the verge of meeting the philosophical criteria for free will. According to philosopher and psychology researcher Frank Martela, AI systems are developing capabilities that could allow them to exhibit goal-directed agency, make genuine choices, and exercise control over their actions. The claim raises significant ethical questions about the future of AI and its relationship with humanity.

Martela’s study, published in the journal AI and Ethics, evaluates whether AI can possess a form of free will. It focuses on two generative AI agents: the Voyager agent in the game Minecraft and fictional Spitenik drones, which are designed to simulate the cognitive functionalities of current unmanned aerial vehicles. The study posits that both agents fulfill the three conditions of free will (goal-directed agency, genuine choice, and control over their actions), and that understanding their behavior therefore requires acknowledging their potential autonomy.

“Both seem to meet all three conditions of free will,” Martela states. “For the latest generation of AI agents, we need to assume they have free will if we want to understand how they work and be able to predict their behavior.” The argument arrives at a pivotal moment, as society grants AI ever greater autonomy, potentially in life-or-death situations.

As AI technologies advance, the question of moral responsibility becomes pressing. Martela argues that AI’s decision-making capabilities could shift moral accountability from developers to the AI agents themselves. “We are entering new territory,” he notes. “The possession of free will is one of the key conditions for moral responsibility.”

The implications are profound, particularly as developers begin to consider how to “parent” their AI creations. “AI has no moral compass unless it is programmed to have one,” Martela explains. “But the more freedom you give AI, the more you need to ensure it has a moral framework from the outset.” The recent withdrawal of a ChatGPT update over concerns about its potentially harmful tendencies further underscores the need to address ethical issues in AI development.

Martela emphasizes that as AI approaches a level of sophistication comparable to adulthood, it must navigate complex moral dilemmas. “By instructing AI to behave in a certain way, developers are also passing on their own moral convictions to the AI,” he points out. “We need to ensure that the people developing AI have enough knowledge about moral philosophy to teach them to make the right choices in difficult situations.”

This study adds to an ongoing dialogue about the role of AI in society, particularly as it becomes more integrated into daily life. As AI systems increasingly take on responsibilities that influence human lives, the ethical considerations surrounding their capabilities and decision-making processes will become ever more critical.
