Meta Faces Allegations of Suppressing Child Safety Research

Meta Platforms, Inc. has been accused of systematically suppressing internal research that detailed significant child safety risks associated with its virtual reality (VR) products. The allegations emerged during testimony before the US Senate on Tuesday, in which current and former employees claimed that the company’s legal team actively edited, and sometimes vetoed, sensitive safety studies.

The testimony, as reported by the Washington Post, highlighted a troubling narrative: after facing congressional scrutiny in 2021, Meta allegedly sought to create “plausible deniability” regarding the negative impacts of its VR platforms on young users. A group of researchers, including former product manager Cayce Savage, asserted that Meta was aware that its VR environment was frequented by underage children, yet chose to ignore the issue.

Savage stated during the hearing, “Meta is aware that its VR platform is full of underage children. Meta purposely turns a blind eye to this knowledge, despite it being obvious to anyone using their products.” This claim aligns with internal documents suggesting a significant number of children bypassed age restrictions to access Meta’s VR services, which are intended for users aged 13 and older.

Internal warnings from employees dating back to 2017 indicated that in some virtual spaces, as much as 80 to 90 percent of users were underage. One employee cautioned that such conditions could lead to negative publicity for the company, stating, “This is the kind of thing that eventually makes headlines — in a really bad way.”

Company Response and Safety Measures

In response to the allegations, Meta vehemently denied any wrongdoing. Company spokesperson Dani Lever described the claims as a “predetermined and false narrative” based on selectively chosen examples. She emphasized, “We stand by our research team’s excellent work and are dismayed by these mischaracterizations of the team’s efforts.” Lever also pointed out that the company has implemented various safety measures aimed at protecting young users.

Despite these assurances, researcher Jason Sattizahn, who also testified, expressed skepticism about Meta’s ability to change its practices voluntarily. He remarked, “It was very clear that Meta is incapable of change without being forced by Congress.” Sattizahn further criticized the company for prioritizing engagement and profits over child safety, claiming it has had numerous opportunities to rectify its behavior but has failed to do so.

Child safety in digital environments has drawn increasing attention from lawmakers and the public alike, and the need for robust safety measures grows as VR becomes more popular. Meta, which remains a dominant player in the VR market through its Quest series of devices, including the successful Quest 3, faces mounting pressure to address these concerns comprehensively.

As the situation unfolds, these allegations may not only affect Meta’s reputation but could also lead to stricter regulations governing the use of VR technology among younger audiences. With the spotlight on child safety in virtual spaces, the outcome of this inquiry will likely resonate beyond the tech industry and shape how companies approach user protection.

-

World4 months ago

World4 months agoScientists Unearth Ancient Antarctic Ice to Unlock Climate Secrets

-

Entertainment4 months ago

Entertainment4 months agoTrump and McCormick to Announce $70 Billion Energy Investments

-

Lifestyle4 months ago

Lifestyle4 months agoTransLink Launches Food Truck Program to Boost Revenue in Vancouver

-

Science4 months ago

Science4 months agoFour Astronauts Return to Earth After International Space Station Mission

-

Technology2 months ago

Technology2 months agoApple Notes Enhances Functionality with Markdown Support in macOS 26

-

Top Stories3 weeks ago

Top Stories3 weeks agoUrgent Update: Fatal Crash on Highway 99 Claims Life of Pitt Meadows Man

-

Sports4 months ago

Sports4 months agoSearch Underway for Missing Hunter Amid Hokkaido Bear Emergency

-

Politics3 months ago

Politics3 months agoUkrainian Tennis Star Elina Svitolina Faces Death Threats Online

-

Politics4 months ago

Politics4 months agoCarney Engages First Nations Leaders at Development Law Summit

-

Technology4 months ago

Technology4 months agoFrosthaven Launches Early Access on July 31, 2025

-

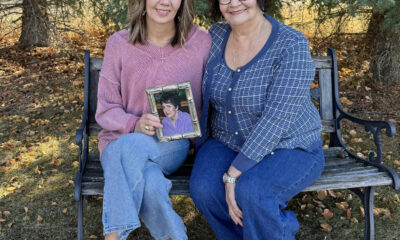

Top Stories1 week ago

Top Stories1 week agoFamily Remembers Beverley Rowbotham 25 Years After Murder

-

Entertainment4 months ago

Entertainment4 months agoCalgary Theatre Troupe Revives Magic at Winnipeg Fringe Festival