Science

State Regulators Act as AI Therapy Apps Surge in Popularity

The rapid rise of AI therapy applications has prompted state regulators in the United States to implement new laws aimed at overseeing these digital tools. As more individuals seek mental health support through artificial intelligence, the absence of comprehensive federal regulation has led to a fragmented landscape where state-level measures vary widely.

Illinois and Nevada have enacted laws prohibiting AI from being used to provide mental health treatment, while Utah has introduced limitations on therapy chatbots, mandating that they safeguard users’ health information and disclose that they are not human. Other states, including Pennsylvania, New Jersey, and California, are also exploring regulatory frameworks. Despite these efforts, mental health advocates and developers express concerns that the current regulations are insufficient to protect users or hold creators accountable for harmful technologies.

“The reality is millions of people are using these tools and they’re not going back,” said Karin Andrea Stephan, CEO and co-founder of the mental health chatbot app Earkick.

The patchwork of state rules complicates the legal landscape. Some applications, such as those offering AI-driven companionship, do not explicitly present themselves as mental health care, so it is unclear whether the laws apply to them. The laws in Illinois and Nevada, by contrast, target products that claim to deliver mental health treatment and impose steep fines of up to $10,000 in Illinois and $15,000 in Nevada. In response, some apps have blocked access in states with bans, leaving users there without access to them.

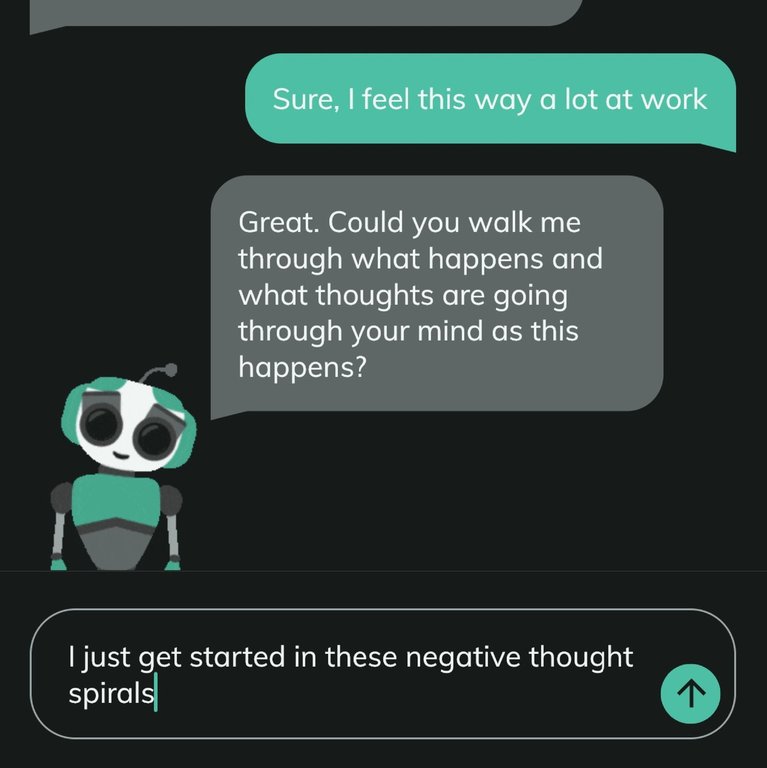

While some applications have embraced the title of “therapist” in their marketing, others have opted for terms like “empathetic AI counselor.” Earkick, which initially promoted its chatbot as a therapist, switched to describing it as a “chatbot for self-care” in response to regulatory scrutiny. “We’re not diagnosing,” Stephan emphasized, noting that the app encourages users to seek professional help when needed.

The Federal Trade Commission (FTC) has initiated inquiries into several prominent AI chatbot companies, including the parent firms of Instagram, Google, and ChatGPT, focusing on their impact on children and teens. Meanwhile, the Food and Drug Administration (FDA) is set to review generative AI-enabled mental health devices in November, a move that signals a potential shift toward increased federal oversight.

The challenges associated with regulating AI therapy apps stem from their varied nature. From “companion apps” to “AI therapists,” the definitions can be ambiguous, complicating regulatory efforts. Vaile Wright, who oversees health care innovation at the American Psychological Association, noted the potential of these apps to address the growing shortage of mental health providers. “This could be something that helps people before they get to crisis,” she stated, though she stressed the need for scientifically grounded applications.

A recent clinical trial conducted by a team at Dartmouth College explored the effectiveness of a generative AI chatbot named Therabot, specifically designed to treat individuals with anxiety, depression, or eating disorders. The study indicated that users experienced significant symptom reduction after eight weeks of interaction with the chatbot, whose conversations were monitored by human professionals as a safety measure.

Despite the promising results, Nicholas Jacobson, a clinical psychologist leading the research, cautioned against the rapid rollout of AI therapy tools without thorough evaluation. He expressed concerns that strict regulations might hinder the development of carefully designed applications that could genuinely benefit users.

Supporters of the new laws, including Kyle Hillman of the National Association of Social Workers, acknowledge the urgency of addressing the mental health provider shortage but argue that AI therapy is not a comprehensive solution. “Not everybody who’s feeling sad needs a therapist,” Hillman explained, emphasizing that for individuals grappling with severe mental health issues, relying solely on AI could be inadequate.

As mental health technology evolves, balancing innovation against user safety remains a critical concern. Regulators, developers, and mental health advocates continue to navigate this complex terrain, seeking rules that protect users while still allowing AI therapy applications to advance.
