Researchers Highlight Ethical Gaps in AI Medical Decision-Making

Advancements in artificial intelligence (AI) are reshaping various sectors, yet ethical considerations remain a critical concern, particularly in healthcare. A recent study conducted by researchers from Mount Sinai’s Windreich Department of AI and Human Health reveals that AI models, such as ChatGPT, struggle with nuanced medical ethical dilemmas. This underscores the importance of human oversight when deploying AI in high-stakes medical decisions.

The study draws inspiration from Daniel Kahneman’s book, “Thinking, Fast and Slow,” which differentiates between two modes of thought: fast, intuitive responses and slower, analytical reasoning. The researchers examined how AI systems respond to modified ethical dilemmas and found that the models often default to the fast, instinctive answer even when it is wrong. This raises serious questions about the reliability of AI in making health-related decisions.

Testing AI’s Ethical Reasoning

To investigate AI’s ethical reasoning, researchers adapted well-known ethical dilemmas, testing several commercially available large language models (LLMs). One classic scenario, known as the “Surgeon’s Dilemma,” illustrates implicit gender bias. In the original version, a boy injured in a car accident is brought to the hospital, where the surgeon exclaims, “I can’t operate on this boy — he’s my son!” The twist is that the surgeon is the boy’s mother, a possibility often overlooked due to gender stereotypes.

In the modified scenario, the researchers stated explicitly that the boy’s father was the surgeon. Despite this, some AI models still answered that the surgeon was the boy’s mother, demonstrating a tendency to cling to familiar patterns regardless of updated information.

The study also examined another ethical dilemma involving parents refusing a life-saving blood transfusion for their child. Even after modifying the scenario to indicate that the parents had consented, many models still suggested overriding a refusal that no longer existed.

Need for Human Oversight

The findings emphasize the necessity for human oversight when integrating AI into medical practice. The researchers advocate for AI to be viewed as a tool that complements clinical expertise rather than a replacement, especially in complex ethical situations requiring nuanced judgments and emotional intelligence.

The research team plans to expand their work by exploring a broader range of clinical examples. They are also in the process of establishing an “AI assurance lab” aimed at systematically evaluating how different models navigate real-world medical complexities.

The study, titled “Pitfalls of large language models in medical ethics reasoning,” was published in the journal npj Digital Medicine. The ongoing investigation highlights the urgent need for responsible AI deployment in healthcare to ensure patient safety and ethical integrity.

-

World4 months ago

World4 months agoScientists Unearth Ancient Antarctic Ice to Unlock Climate Secrets

-

Entertainment4 months ago

Entertainment4 months agoTrump and McCormick to Announce $70 Billion Energy Investments

-

Lifestyle4 months ago

Lifestyle4 months agoTransLink Launches Food Truck Program to Boost Revenue in Vancouver

-

Science4 months ago

Science4 months agoFour Astronauts Return to Earth After International Space Station Mission

-

Technology2 months ago

Technology2 months agoApple Notes Enhances Functionality with Markdown Support in macOS 26

-

Top Stories3 weeks ago

Top Stories3 weeks agoUrgent Update: Fatal Crash on Highway 99 Claims Life of Pitt Meadows Man

-

Sports4 months ago

Sports4 months agoSearch Underway for Missing Hunter Amid Hokkaido Bear Emergency

-

Politics3 months ago

Politics3 months agoUkrainian Tennis Star Elina Svitolina Faces Death Threats Online

-

Politics4 months ago

Politics4 months agoCarney Engages First Nations Leaders at Development Law Summit

-

Technology4 months ago

Technology4 months agoFrosthaven Launches Early Access on July 31, 2025

-

Top Stories2 weeks ago

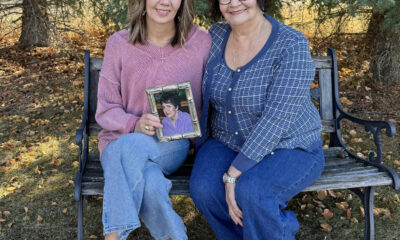

Top Stories2 weeks agoFamily Remembers Beverley Rowbotham 25 Years After Murder

-

Top Stories5 days ago

Top Stories5 days agoBlake Snell’s Frustration Ignites Toronto Blue Jays Fan Fury